Fans of soccer/football have been left bereft of their prime form of entertainment these past few months, and I’ve seen a huge uptick in the number of casual fans and bloggers turning to programming languages such as R or Python to augment their analytical toolkits. Free and easily accessible data can be hard to find if you have only just started down this path, and even when you do find it, you’ll eventually discover that dragging your mouse around and copying stuff into Excel just isn’t time efficient or even possible. The solution to this is web scraping! However, I feel like a lot of people aren’t aware of the ethical conundrums surrounding web scraping (especially if you’re coming from outside of a data science/programming/etc. background … and even if you are, I might add). I am by no means an expert, but since I started learning about it all I’ve tried to “web scrape responsibly”, and this tenet will be emphasized throughout this blog post. I will be going over examples to scrape soccer data from Wikipedia, soccerway.com, and transfermarkt.com. Do note this is focused on the web-scraping part and won’t cover the visualization; links to the viz code will be given at the end of each section, and you can always check out my soccer_ggplot Github repo for more soccer viz goodness!

Anyway, let’s get started!




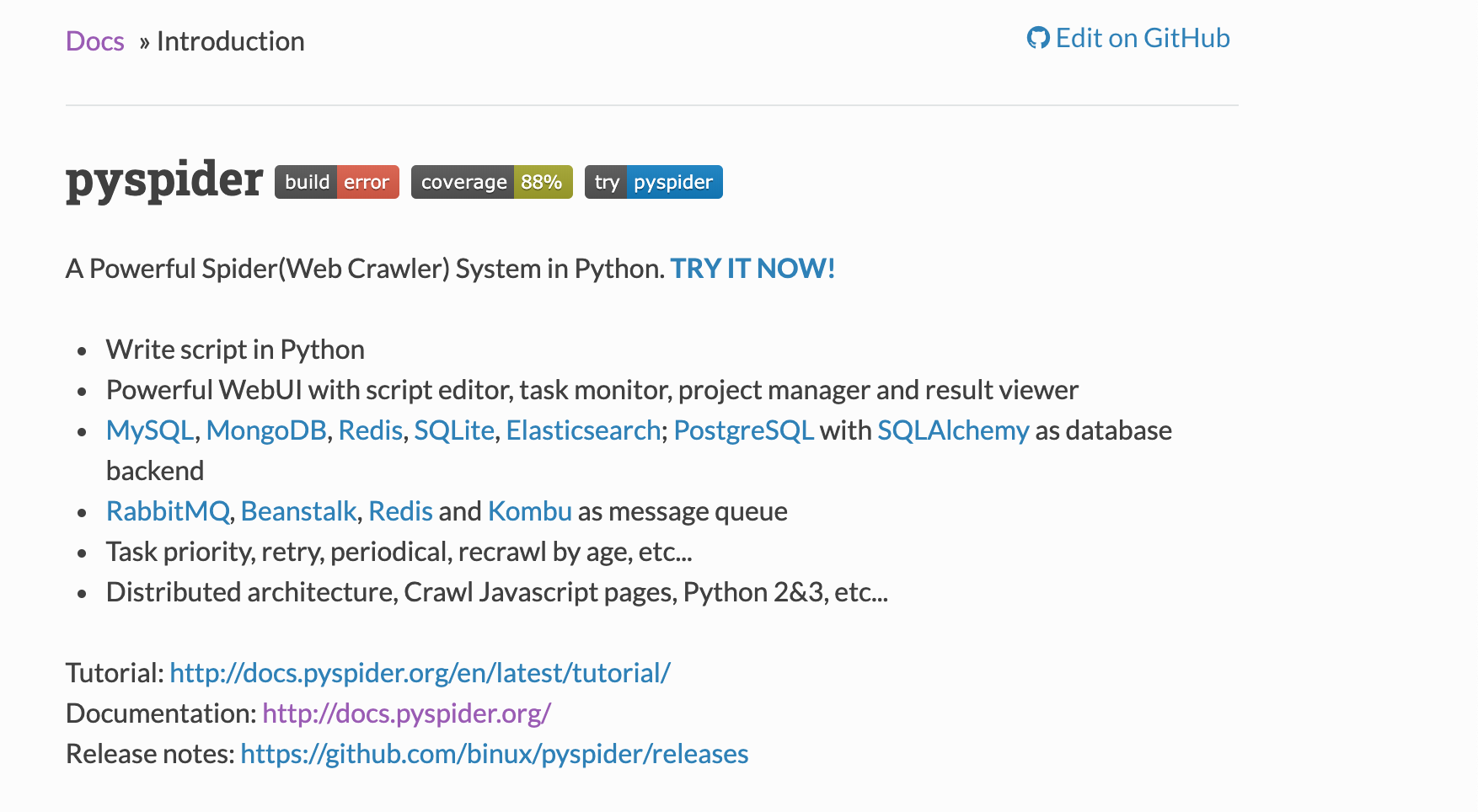

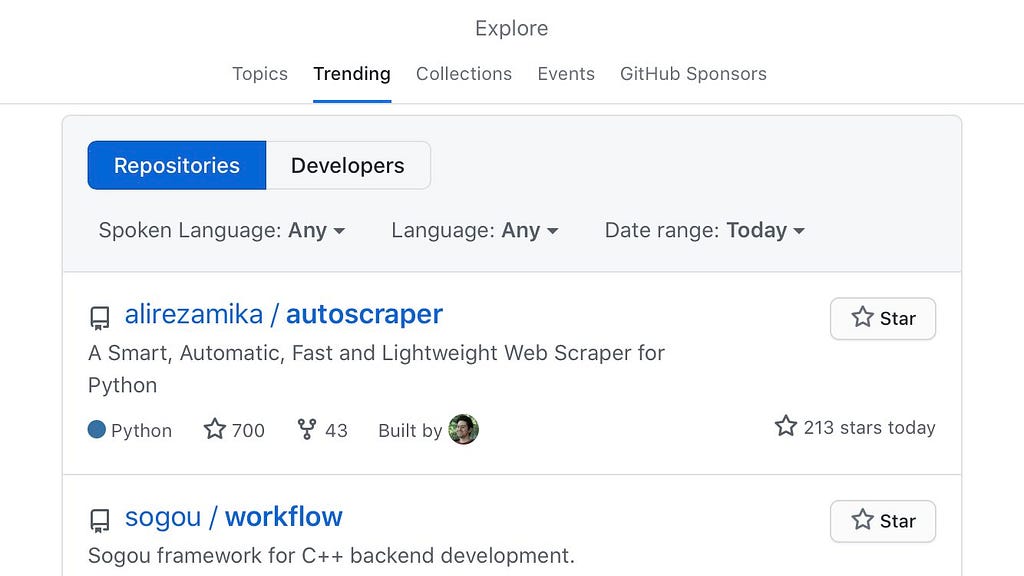


When we think about R and web scraping, we normally just think straight to loading {rvest} and going right on our merry way. However, there are quite a lot of things you should know about web scraping practices before you start diving in. “Just because you can, doesn’t mean you should.” robots.txt is a file on a website that describes the permissions/access privileges for any bots and crawlers that come across the site. Certain parts of the website may not be accessible to certain bots (say Twitter or Google), some may not be available at all, and in the most extreme case, web scraping may even be prohibited. However, do note that just because there is no robots.txt file, or because it is permissive of web scraping, does not automatically mean you are allowed to scrape. You should always check the website’s “Terms of Use” or similar pages.

Web scraping takes up bandwidth for a host, especially if it houses lots of data. So writing web scraping bots and functions that are polite and respectful of the hosting site is necessary so that we don’t inconvenience websites that are doing us a service by making the data available to us for free! There are a lot of things we take for granted, especially regarding free soccer data, so let’s make sure we can keep it that way.

In R there are a number of different packages that facilitate responsible web scraping, including:

- {robotstxt} is a package created by Peter Meissner that provides functions to parse robots.txt files in a clean way.

- {ratelimitr}, created by Tarak Shah, provides ways to limit the rate at which functions are called. You can define a certain n calls per period of time for any function wrapped in ratelimitr::limit_rate().
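As a minimal sketch of what these two packages do, the snippet below parses a made-up robots.txt from text (so no request is sent) and rate-limits a stand-in function. The robots.txt rules, the paths, and the polite_fetch() helper are all assumptions for illustration, not taken from any real site.

```r
library(robotstxt)
library(ratelimitr)

# A made-up robots.txt, parsed from text so that nothing is downloaded.
rules <- robotstxt(text = "User-agent: *\nDisallow: /private/\nCrawl-delay: 5\n")

# check() reports, for each path, whether the given bot may fetch it.
allowed <- rules$check(
  paths = c("/private/data.html", "/stats/index.html"),
  bot   = "*"
)
print(allowed)

# polite_fetch() is a hypothetical stand-in for a real download function.
polite_fetch <- function(path) paste("fetched", path)

# Allow at most 2 calls per 1-second period; further calls wait their turn.
limited_fetch <- limit_rate(polite_fetch, rate(n = 2, period = 1))

fetched <- vapply(c("/a", "/b", "/c"), limited_fetch, character(1))
print(fetched)
```

For a live site you would more likely pass a domain instead of text, which does send a request for the site’s robots.txt.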

The {polite} package combines a lot of the things previously mentioned into one neat package that flows seamlessly with the {rvest} API. I’ve been using this package almost since its first release and it’s terrific! I got to see the package author (Dmytro Perepolkin) do a presentation on it at useR! 2019; you can find the video recording here. This blog post will mainly focus on using {rvest} in combination with the {polite} package.

For the first example, let’s start with scraping soccer data from Wikipedia, specifically the top goal scorers of the Asian Cup.

We use polite::bow() to pass the URL for the Wikipedia article to get a polite session object. This object tells you about the robots.txt, the recommended crawl delay between scraping attempts, and whether you are allowed to scrape this URL or not. You can also add your own user name in the user_agent argument to introduce yourself to the website.
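A minimal sketch of this step is below. The helper name make_session(), the user agent string, and the Wikipedia URL in the usage comment are placeholders I am assuming for illustration; swap in your own details and the article you actually want.

```r
library(polite)

# Hypothetical helper: introduce yourself and get a polite session object.
# The default agent string is a placeholder; use your own name and contact.
make_session <- function(url, agent = "Your Name (contact@example.com)") {
  bow(url = url, user_agent = agent)
}

# Usage (needs an internet connection, so it is left commented out here):
# session <- make_session(
#   "https://en.wikipedia.org/wiki/AFC_Asian_Cup_records_and_statistics"
# )
# session  # printing shows the robots.txt summary, crawl delay, and scrape permission
```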

Of course, just to make sure, remember to read the “Terms of Use” page as well. When it comes to Wikipedia though, you could just download all of Wikipedia’s data yourself and do a text-search through those files, but that’s out-of-scope for this blog post. Maybe another time!

Now to actually get the data from the webpage. You’ve got different options depending on what browser you’re using, but on Google Chrome or Mozilla Firefox you can find the exact HTML element by right-clicking on it and then clicking on “Inspect” or “Inspect Element” in the pop-up menu. By doing so, a new view will open up showing you the full HTML content of the webpage with the element you chose highlighted. (See first two pics)

You might also want to try using a handy JavaScript tool called SelectorGadget; you can learn how to use it here. It allows you to click on different elements of the web page and the gadget will try to ascertain the exact CSS Selector in the HTML. (See bottom pic)

Do be warned that web pages can change suddenly and the CSS Selector you used in the past might not work anymore. I’ve had this happen more than a few times as pages get updated with more info from new tournaments and such. This is why you really should try to scrape from a more stable website, but a lot of times for “simple” data Wikipedia is the easiest and best place to scrape.

From here you can right-click again on the highlighted HTML code to “Copy”, and then you can choose one of “CSS Selector”, “CSS Path”, or “XPath”. I normally use “CSS Selector” and it will be the one I will use throughout this tutorial. This is the exact reference within the HTML code of the webpage of the object you want. I make sure to choose the CSS Selector for the table itself and not just the info inside the table.

With this copied, you can go to your R script/RMD/etc. After running the polite::scrape() function on your bow object, paste the CSS Selector/Path/XPath you just copied into html_nodes(). The bow object already has the recommended scrape delay as stipulated in a website’s robots.txt, so you don’t have to input it manually when you scrape.


Grabbing an HTML table is the easiest way to get data as you usually don’t have to do too much work to reshape the data afterwards. We can do that with the html_table() function. As the HTML object is returned as a list, we have to flatten it out one level using purrr::flatten_df(). Finish cleaning it up by taking out the unnecessary “Ref” column with select() and renaming the columns with set_names().
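The table steps can be sketched offline on a small inline HTML table. The table contents, the table.wikitable selector, and the column names below are made up for illustration; with a live page, the parsed document would come from polite::scrape() on the bow object instead of read_html() on a string. Newer versions of {purrr} may warn that flatten_df() is deprecated.

```r
library(rvest)
library(purrr)
library(dplyr)

# Stand-in for a scraped page: a tiny made-up table with a "Ref" column.
page <- read_html('<table class="wikitable">
  <tr><th>Rank</th><th>Player</th><th>Goals</th><th>Ref</th></tr>
  <tr><td>1</td><td>Player A</td><td>14</td><td>[1]</td></tr>
  <tr><td>2</td><td>Player B</td><td>13</td><td>[2]</td></tr>
</table>')

scorers <- page %>%
  html_nodes("table.wikitable") %>%  # selector copied from the browser
  html_table() %>%                   # returns a list with one table in it
  flatten_df() %>%                   # flatten the list into a data frame
  select(-Ref) %>%                   # drop the unneeded reference column
  set_names(c("rank", "player", "goals"))

print(scorers)
```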

After adding some flag and soccer ball images to the data.frame we get this:

Do note that the image itself is from before the 2019 Asian Cup but the data we scraped in the code above is updated. As a visualization challenge, try to create a similar viz with the updated data! You can take a look at my Asian Cup 2019 blog post for how I did it. Alternatively, you can try doing the same as above except with the Euros. Try grabbing the top goal scorer table from that page and make your own graph!

So now let’s try a soccer-specific website, as that’s really the goal of this blog post. This time we’ll go for one of the most famous soccer websites around, transfermarkt.com. It is used as a data source by everyone from your humble footy blogger to big news sites such as the Financial Times and the BBC.

The example we’ll try is from an Age-Value graph for the J-League I made around 2 years ago when I just started doing soccer data viz (how time flies…).

The basic steps are the same as before, but I’ve found that it can be quite tricky to find the right nodes on transfermarkt even with the CSS Selector Gadget or other methods we described in previous sections. After a while you’ll get used to the quirks of how the website is structured and easily know what certain assets (tables, columns, images) are called. This is a website where the SelectorGadget really comes in handy!

This time around I won’t be grabbing an entire table like I did with Wikipedia but a number of elements from the webpage. You definitely can scrape for the table like I showed above with html_table(), but in this case I didn’t because the table output was rather messy, gave me way more info than I actually needed, and I wasn’t very good at regex/stringr to clean the text 2 years ago. Try doing it the way below and also by grabbing the entire table for more practice.

The way I did it back then also works out for this blog post because I can show you a few other html_*() {rvest} functions:

- html_table(): Get data from an HTML table

- html_text(): Extract text from HTML

- html_attr(): Extract attributes from HTML ('src' for image filename, 'href' for URL link address)

With each element collected we can put them into a list and reshape it into a nice data frame.
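Here is a small offline sketch of html_text() and html_attr(), followed by collecting the pieces into a data frame. The HTML snippet, class names, and values are invented for illustration and are not transfermarkt’s real markup; you would find the real selectors with Inspect or the SelectorGadget.

```r
library(rvest)
library(tibble)

# Made-up markup standing in for a league overview page.
page <- read_html('<div class="team">
    <a class="team-name" href="/team-a/startseite/verein/1">Team A</a>
    <img class="crest" src="crest_a.png">
    <span class="avg-age">25.4</span>
  </div>
  <div class="team">
    <a class="team-name" href="/team-b/startseite/verein/2">Team B</a>
    <img class="crest" src="crest_b.png">
    <span class="avg-age">27.1</span>
  </div>')

# html_text() pulls the visible text; html_attr() pulls a named attribute.
team_name <- page %>% html_nodes("a.team-name") %>% html_text()
team_url  <- page %>% html_nodes("a.team-name") %>% html_attr("href")
team_img  <- page %>% html_nodes("img.crest") %>% html_attr("src")
avg_age   <- page %>% html_nodes("span.avg-age") %>% html_text() %>% as.numeric()

# Collect the elements in a named list and reshape it into a data frame.
teams_df <- list(
  team_name = team_name,
  team_url  = team_url,
  team_img  = team_img,
  avg_age   = avg_age
) %>%
  as_tibble()

print(teams_df)
```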

With some more cleaning and {ggplot2} magic (see here, start from line 53) you will then get:

Some other examples by scraping single web pages:

- transfermarkt: simple age-utility plot from 2018

The previous examples looked at scraping from a single web page, but usually you want to collect data for each team in a league, each player from each team, or each player from each team in every league, etc. This is where the added complexity of web-scraping multiple pages comes in. The most efficient way is to programmatically scrape across multiple pages in one go instead of running the same scraping function on different teams’/players’ URL links over and over again.

- Understand the website structure: How it organizes its pages, check out what the CSS Selector/XPaths are like, etc.

- Get a list of links: Team page links from league page, player page links from team page, etc.

- Create your own R functions: Pinpoint exactly what you want to scrape as well as some cleaning steps post-scraping in one function or multiple functions.

- Start small, then scale up: Test your scraping function on one player/team, then do entire team/league.

- Iterate over a set of URL links: Use {purrr}, for loops, lapply() (whatever your preference).

Look at the URL link for each web page you want to gather. What are the similarities? What are the differences? If it’s a proper website, then the web page for a certain data view for each team should be structured exactly the same, as you’d expect it to contain exactly the same type of info just for a different team. For this example, the “squad view” page for each Premier League team on soccerway.com is structured similarly: “https://us.soccerway.com/teams/england/”, then the “team name/”, the “team number/”, and finally the name of the web page, “squad/”. So what we need to do here is to find out the “team name” and “team number” for each of the teams and store them. We can then feed each pair of these values in one at a time to scrape the information for each team.

To find these elements we could just click on the link for each team and jot them down … but wait, we can just scrape those too! We use the html_attr() function to grab the “href” part of the HTML, which contains the hyperlink of that element. The left picture is looking at the URL link of one of the buttons to a team’s page via “Inspect”. The right picture is selecting every team’s link via the SelectorGadget.

The URLs given in the href of the HTML for the team buttons unfortunately aren’t the full URLs needed to access these pages. So we have to cut out the important bits and re-create them ourselves. We can use the {glue} package to combine the “team_name” and “team_num” for each team in the incomplete URL into a complete URL in a new column we’ll call link.
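A rough sketch of rebuilding the links with {glue} follows. The two href values (including the team numbers) are made-up examples of the partial-URL shape described above, and pulling the pieces apart with basename()/dirname() is just one option I am assuming here; regex or tidyr::separate() would also work.

```r
library(dplyr)
library(glue)

# Hypothetical partial URLs as they might come back from html_attr("href").
team_links_df <- tibble(
  href = c("/teams/england/liverpool-fc/663/",
           "/teams/england/arsenal-fc/660/")
) %>%
  mutate(
    team_num  = basename(href),           # last path piece, e.g. "663"
    team_name = basename(dirname(href)),  # second-to-last piece
    link = glue("https://us.soccerway.com/teams/england/{team_name}/{team_num}/squad/")
  )

print(team_links_df$link)
```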

Fantastic! Now we have the proper URL links for each team. Next we have to actually look into one of the web pages itself to figure out what exactly we need to scrape from the web page. This assumes that each web page and the CSS Selector for the various elements we want to grab are the same for every team. As this is for a very simple goal contribution plot, all we need to gather from each team’s page is the “player name”, “number of goals”, and “number of assists”. Use the Inspect element or the SelectorGadget tool to grab the HTML code for those stats.

Below, I’ve split each into its own mini-scraper function. When you’re working on this part, you should try to use the URL link from one team and build your scraper functions from that link (I usually use Liverpool as my test example when scraping Premier League teams). Note that all three of the mini-functions below could just be chucked into one large function but I like keeping things compartmentalized.

Now that we have scrapers for each stat, we can combine these into a larger function that will then gather them all up into a nice data frame for each team that we want to scrape. If you input any one of the team URLs from team_links_df, it will collect the “player name”, “number of goals”, and “number of assists” for that team.
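To illustrate the “mini-scrapers plus one combining function” idea, here is an offline sketch that works on an already-parsed page. The function names, the CSS selectors, and the inline squad table are all hypothetical; a real version would take a team’s link, bow() to it, scrape() the page, and use the selectors you found with Inspect or the SelectorGadget.

```r
library(rvest)
library(tibble)

# Mini-scrapers: each one pulls a single stat out of a parsed page.
get_player_names <- function(page) {
  page %>% html_nodes("td.name a") %>% html_text()
}
get_num_goals <- function(page) {
  page %>% html_nodes("td.goals") %>% html_text() %>% as.numeric()
}
get_num_assists <- function(page) {
  page %>% html_nodes("td.assists") %>% html_text() %>% as.numeric()
}

# Combine the mini-scrapers into one data frame per team.
team_stats_from_page <- function(page, team_name) {
  tibble(
    team    = team_name,
    name    = get_player_names(page),
    goals   = get_num_goals(page),
    assists = get_num_assists(page)
  )
}

# Made-up squad page used to test the functions on ONE team first.
test_page <- read_html('<table>
  <tr><td class="name"><a href="/p/1">Player A</a></td>
      <td class="goals">12</td><td class="assists">5</td></tr>
  <tr><td class="name"><a href="/p/2">Player B</a></td>
      <td class="goals">3</td><td class="assists">9</td></tr>
</table>')

test_team_df <- team_stats_from_page(test_page, team_name = "test-fc")
print(test_team_df)
```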

Iteration Over a Set of Links

OK, so now we have a function that can scrape the data for ONE team, but it would be extremely ponderous to re-run it another NINETEEN times for all the other teams… so what can we do? This is where the purrr::map() family of functions and iteration comes in! The map() family of functions allows you to apply a function (an existing one from a package or one that you’ve created yourself) to each element of a list or vector that you pass as an argument to the mapping function. For our purposes, this means we can use mapping functions to pass along a list of URLs (for whatever number of players and/or teams) along with a scraping function so that it scrapes them all together in one go.

In addition, we can use purrr::safely() to wrap any function (including custom made ones). This makes these functions return a list with the components result and error. This is extremely useful for debugging complicated functions, as the function won’t just error out and give you nothing; instead you get whatever worked in result and whatever didn’t work in error.

So for example, say you are scraping data from the webpage of each team in the Premier League (by iterating a single scraping function over each team’s web page) and by some weird quirk in the HTML of the web page or in your code, the data from one team errors out (while the other 19 teams’ data are gathered without problems). Normally, this will mean the data you gathered from all the other web pages that did work won’t be returned, which can be extremely frustrating. With a safely()-wrapped function, the data from each of the 19 teams that the function was able to scrape is returned in the result component of that team’s list element, while for the one team that errored the error message is returned in the error component. This makes it very easy to debug when you know exactly which iteration of the function failed.
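A toy sketch of this behaviour is below. The scrape_team() function, the team names, and the example.com links are made up; the function deliberately fails for one team so you can see what safely() gives back.

```r
library(purrr)

# Hypothetical scraper that fails for one particular team.
scrape_team <- function(team_name, link) {
  if (team_name == "broken-fc") {
    stop("squad table not found at ", link)
  }
  data.frame(team = team_name, link = link)
}

# safely() returns a new function that never throws; it returns
# list(result = ..., error = ...) instead.
safe_scrape_team <- safely(scrape_team)

team_names <- c("liverpool-fc", "broken-fc", "arsenal-fc")
links <- paste0("https://example.com/", team_names, "/squad/")

# map2() walks over both vectors in parallel, one team at a time.
results <- map2(team_names, links,
                ~ safe_scrape_team(team_name = .x, link = .y))

# The failing team has a NULL result and an error; the others the reverse.
str(results, max.level = 2)
```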

We already have a nice list of team URL links in the data frame team_links_df, specifically in the “link” column (team_links_df$link). So we pass that along as an argument to map2() (which is just a version of map() but for two argument inputs) and our premier_stats_info() function so that the function will be applied to each team’s URL link. This part may take a while depending on your internet connection and/or if you put a large value for the crawl delay.

As you can see (the results/errors for the first four teams scraped), for each team there is a list holding a “result” and “error” element. For the first four, at least, it looks like everything was scraped properly into a nice data.frame. We can check if any of the twenty teams had an error by purrr::discard()-ing any elements of the list that come out as NULL and seeing if there’s anything left.

It comes out as an empty list, which means there were no errors in the “error” elements. Now we can squish and combine the individual team data.frames into one data.frame using dplyr::bind_rows().
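The error check and the final combine can be sketched as follows. The results list here is hand-built with made-up teams and numbers to mimic the shape that a safely()-wrapped scraper returns when every team succeeds.

```r
library(purrr)
library(dplyr)

# Hand-built stand-in for the output of mapping a safely()-wrapped scraper.
results <- list(
  list(result = data.frame(team = "team-a", goals = 10), error = NULL),
  list(result = data.frame(team = "team-b", goals = 7),  error = NULL)
)

# Pull out every "error" element and drop the NULLs (the successes).
errors <- results %>%
  map("error") %>%
  discard(is.null)

length(errors)  # an empty list means nothing failed

# Pull out every "result" data frame and stack them into one.
all_teams_df <- results %>%
  map("result") %>%
  bind_rows()

print(all_teams_df)
```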

With that we can clean the data a bit and finally get on to the plotting! You can find the code in the original gist to see how I created the plot below. I really would like to go into detail, especially as I use one of my favorite plotting packages, {ggforce}, here, but it deserves its own separate blog post. NOTE: The data we scraped in the above section is for this season (2019-2020), so the annotations won’t be the same as in the original gist, which is for 2018-2019. Try to play around with the different annotation options in R as practice!

As you can see, this one was for the 2018-2019 season. I made a similar one but using xG per 90 and xA per 90 for the 2019-2020 season (as of January 1st, 2020 at least) using FBRef data here. You can find the code for it here. However, I did not web scrape it, as according to their Terms of Use page, FBRef (or any of the SportsRef websites) does not allow web scraping (“spidering”, “robots”). Thankfully, they make it very easy to access their data as downloadable .csv files by just clicking on a few buttons, so getting their data isn’t really a problem!

For practice, try doing it for a different season or for a different league altogether!

For other examples of scraping multiple pages:

- transfermarkt: (Opta-inspired Age-Utility plot from February 28, 2020)

This blog post went over web-scraping, focusing on getting soccer data from soccer websites in a responsible fashion. After a brief overview of responsible scraping practices with R, I went over several examples of getting soccer data from various websites. I make no claims that it’s the most efficient way, but importantly, it gets the job done and in a polite way. More industrial-scale scraping over hundreds and thousands of web pages is a bit out of scope for an introductory blog post and it’s not something I’ve really done either, so I will pass along the torch to someone else who wants to write about that. There are other ways to scrape websites using R, especially websites that have dynamic web pages, using R Selenium, Headless Chrome (crrri), and other tools.

In regards to FBRef, as it is now a really popular website to use (especially with their partnership with StatsBomb), there is a blog post out there detailing a way of using R Selenium to get around the terms stipulated, and the reasoning seems OK but I am still not 100% sure. This goes again into how a lot of web scraping can be in a rather grey area at times, as for all the clear warnings on some websites you have a lot more ambiguity and ability to use some expedient interpretation in others. At the end of the day, you just have to do your due diligence, ask permission directly if possible, and be {polite} about it.

Some other web-scraping tutorials you might be interested in:

As always, you can find more of my soccer-related stuff on this website or on the soccer_ggplots Github repo!

Happy (responsible) Web-scraping!

Tutorial

Writing Scrapers

The core of a pjscrape script is the definition of one or more scraper functions. Here's what you need to know:

Scraper functions are evaluated in a full browser context. This means you not only have access to the DOM, you have access to Javascript variables and functions, AJAX-loaded content, etc.

Scraper functions are evaluated in a sandbox (read more here). Closures will not work the way you think:

The best way to think about your scraper functions is to assume the code is being eval()'d in the context of the page you're trying to scrape.

Scrapers have access to a set of helper functions in the _pjs namespace. See the Javascript API docs for more info. One particularly useful function is _pjs.getText(), which returns an array of text from the matched elements:

For this instance, there's actually a shorter syntax - if your scraper is a string instead of a function, pjscrape will assume it is a selector and use it in a function like the one above:

Scrapers can return data in whatever format you want, provided it's JSON-serializable (so you can't return a jQuery object, for example). For example, the following code returns the list of towns in the Django fixture syntax:

Scraper functions can always access the version of jQuery bundled with pjscrape (currently v.1.6.1). If you're scraping a site that also uses jQuery, and you want the latest features, you can set noConflict: true and use the _pjs.$ variable:

Asynchronous Scraping

Docs coming soon. For now, see:

- Test for the ready option - wait for a ready condition before starting the scrape.

- Test for asynchronous scrapes - scraper function is expected to set _pjs.items when its scrape is complete.

Crawling Multiple Pages



Docs coming soon - the main thing is to set the moreUrls option to either a function or a selector that identifies more URLs to scrape. For now, see: